There’s this theory that AI is going to replace all the engineers and then come for product managers and everyone else.

The theory makes sense, just like the theory about the rational consumer makes sense.

This does not mean, though, that it works in real life every time, all the time (and certainly not immediately).

Reality rarely looks like the examples you find on LinkedIn

You’ve probably seen the AI hacks, prompt hacks, prompt recommendations, and detailed descriptions of how to prompt the AI of your choice to spit out something that is useful.

These examples do not represent the use of AI by most people.

Seriously, ask three colleagues or friends to share their ChatGPT history with you. The prompts are probably underwhelming - at least compared to those elaborate examples everyone throws around.

AI is super helpful if you can write a prompt that allows it to do its best work. And its best work is based on the data it was trained on. So if the whole internet is its oyster, you have to be very specific about the context and the reality of what you are asking for.

Detour: ChatGPT “knows” that “everyone” loves boobs

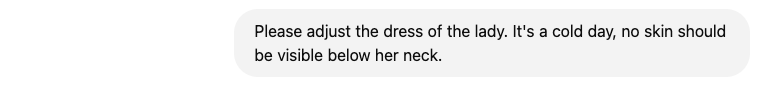

I have an ongoing conversation with ChatGPT to create images for my writing in this newsletter - think ball gowns and silver tiaras.

Here’s the prompt. As you can see, I am starting to get a little exasperated by the constant boob display:

Please generate a pop art image of a lady welcoming a group of people into a room. She is wearing a ball gown with a high neckline (no cleavage, no boobs) and a silver tiara in her hair.

So - this is what ChatGPT understands as a high-neck ball gown. Interesting - let’s make it a bit clearer.

Not sure if I can be more explicit than that? Anyway, here’s the result:

I mean, we now have more windows and we’ve lost the whole idea of welcoming people - but the cleavage? Call me antiquated, but this is not what I had in mind with my prompt.

And I am pretty sure that a human designer would have gotten the memo with my very first request.

How specific is specific enough?

So, here’s the thing - I can probably refine the prompt until I get the exact result that I need. The easiest solution? As soon as I ask for an emperor, the cleavage is not an issue anymore.

Why does that matter?

If you want to be unique, if you want to create something different to the norm, if you want to innovate - then you’ll have to be REALLY specific in your requests. So specific that your requirements suddenly aren’t just four lines plugged into a generalized template you found on LinkedIn.

You need to create really detailed requirements.

You need to give context, a lot of context.

You need to clearly explain the scope, and where it stops.

You need to explain really clearly what you are trying to achieve. And your AI companion will do EXACTLY what you are asking for.

Or at least as exactly as it can decipher from the data it was trained on - which may or may not be relevant to your specific niche.

The advantage of knowledge in context

So, here’s the thing.

If your Product Managers can write a prompt so specific that they get the required results from an AI faster than from your own engineers, imagine what your engineers could do. They actually understand the industry you operate in and the existing stack you are building on, and (hopefully) their ideas aren’t muddled by cleavage and boobs.

That’s where AI comes in as your little helper. AI is fast, a lot faster than your engineers, without doubt. And yet, your engineers are probably better at getting it to do something that makes sense and is useful for your customers.

If a four-line prompt could have created your solution, someone else would have already done it.

At this point in the race, ideas are a dime a dozen, lazy execution is worthless, and unchallenged viewpoints create the same beige boredom for everyone.

You need diversity of opinion, diversity of experience, different angles, and the ability to challenge AI and yourself.

There’s always more than one solution. And they don’t come in bullet points.